Gemini 3.1 Pro: Google Unveils Upgraded Model for Complex Reasoning Tasks

New Version Doubles Prior Benchmark Performance and Expands Access Across Consumer and Enterprise Tools

What Happened

Google has launched Gemini 3.1 Pro, an upgraded core intelligence model designed for complex reasoning tasks across science, engineering, research and enterprise workflows.

The release follows last week’s update to Gemini 3 Deep Think. According to the Gemini team, 3.1 Pro provides the foundational reasoning improvements that power those advanced capabilities.

The model is being rolled out in preview across multiple platforms:

Developers: the Gemini API in Google AI Studio, Gemini CLI, Google Antigravity (Google’s agentic development platform) and Android Studio

Enterprises: Vertex AI and Gemini Enterprise

Consumers: the Gemini app, plus NotebookLM for Pro and Ultra subscribers

Google says Gemini 3.1 Pro represents a significant step forward in core reasoning ability compared to its predecessor, Gemini 3 Pro.

On the ARC-AGI-2 benchmark, which measures a model’s ability to solve unfamiliar logic problems, 3.1 Pro reportedly achieved a verified score of 77.1%, more than double Gemini 3 Pro’s score.

What Is New

Google positions 3.1 Pro as a smarter baseline model for tasks where a simple answer is insufficient.

The company emphasizes improvements in:

Multi-step reasoning

Complex problem-solving

Data synthesis

Creative technical outputs

Agentic workflows

One example highlighted by Google is the model’s ability to generate animated SVG graphics directly from text prompts. Unlike pixel-based video, SVG animations are code-based, scalable without quality loss, and file-size efficient — making them web-ready assets.

The upgrade aims to make advanced reasoning practical in everyday use cases, including:

Visual explanations of technical subjects

Summarizing and unifying complex datasets

Engineering design assistance

Creative prototyping

Code generation and structured outputs

Google states that 3.1 Pro will serve as the intelligence core across consumer apps and developer platforms moving forward.

Analysis

1. Benchmark Gains vs. Real-World Performance

The ARC-AGI-2 score of 77.1% is notable because the benchmark tests abstract reasoning on novel problems rather than recall of memorized patterns. More than doubling the predecessor’s score suggests improved generalization.

However, benchmark gains do not always translate directly into everyday productivity improvements. The real measure will be:

Reliability in edge cases

Reduction of hallucinations

Stability in multi-step tasks

Performance in agent-driven workflows

Releasing the model in preview first gives Google a chance to validate its performance under real-world usage before general availability.

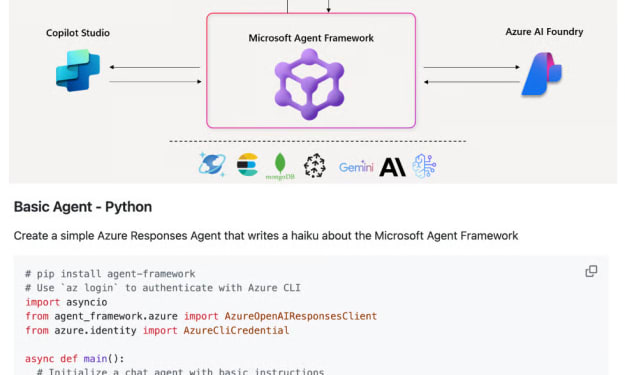

2. Strategic Shift Toward “Agentic” AI

Google continues to emphasize agentic development: AI systems that can autonomously carry out structured sequences of tasks rather than simply generate text responses.

By integrating 3.1 Pro across:

Gemini CLI

Antigravity

Vertex AI

Enterprise environments

Google is signaling that future competition in AI will focus less on chatbot conversation quality and more on task orchestration and execution.

This aligns with industry-wide trends toward AI agents capable of coding, automating workflows, and interacting with external systems.

3. Tiered Access and Monetization

Access to Gemini 3.1 Pro is being gated strategically:

Consumer users require Google AI Pro or Ultra plans

NotebookLM access is limited to premium subscribers

Enterprises gain preview access via Vertex AI and Gemini Enterprise

This suggests Google is positioning 3.1 Pro as a premium intelligence layer, monetizing higher reasoning capability while maintaining baseline models for free users.

4. Competitive Context

The launch comes amid intensified competition in advanced AI reasoning models. Improvements in logical abstraction benchmarks such as ARC-AGI-2 are increasingly used as signals of frontier-level model capability.

While Google does not directly reference competitors, the messaging emphasizes “core reasoning breakthroughs,” implying a race toward models capable of deeper structured thinking rather than surface-level generation.

The Bigger Picture

Google presents Gemini 3.1 Pro not as a cosmetic upgrade but as a foundational improvement in core reasoning.

If performance claims hold under widespread deployment, 3.1 Pro could:

Improve reliability in research and engineering contexts

Enable more advanced agentic systems

Expand AI’s use in structured enterprise environments

Narrow the gap between conversational AI and task automation systems

However, the preview status suggests Google is still refining the model’s behavior in complex workflows before a broader release.

The key question is not whether 3.1 Pro is smarter on paper — but whether it demonstrates measurable gains in:

Reduced hallucination rates

Long-chain reasoning stability

Safe autonomy in agent systems

Cost-efficient scaling

Google has framed this as the intelligence backbone for its next phase of AI tools.

Now, developers and enterprises will test whether that promise translates into consistent, real-world performance.
