Can A Lion Cause An AI Company To Lose Millions of Dollars?

Fact, Speculation, and the Thin Line Between Reality and Story

In an age where artificial intelligence is advancing at breakneck speed, stories about machines behaving in unexpected ways capture public imagination quickly. One particularly striking narrative asks: can an encounter with a lion really cause an AI company to lose millions of dollars?

The short answer: costly AI failures and field-testing setbacks are well documented, but the specific story of a robot being "traumatized" by a lion belongs largely to speculation and illustrative storytelling.

Let's separate what we know from what we imagine.

What Is Fact: AI Experiments Can Cost Millions

There is no doubt that AI research is expensive. Companies routinely spend millions — sometimes billions — on developing, testing, and deploying advanced systems.

Real-world examples show how costly setbacks can be:

Self-driving car programs have experienced crashes leading to expensive redesigns and delays.

Robotics projects often face hardware failures during field testing.

AI models sometimes require retraining after unexpected behavior, costing significant compute resources.

Major organizations like Boston Dynamics and OpenAI invest heavily in testing systems in complex environments precisely because real-world conditions reveal weaknesses that simulations miss.

Fact: When prototypes fail, companies can lose substantial money through repairs, downtime, and reputational impact.

What Is Fact: Robots Are Tested in Harsh Environments

Robotics researchers regularly deploy machines in difficult settings:

Disaster zones

Remote wilderness areas

Industrial sites

Military and search-and-rescue operations

Testing in environments with unpredictable variables — including wildlife — is not unheard of, especially for environmental monitoring or conservation technology.

Fact: Field testing is essential to building resilient AI systems.

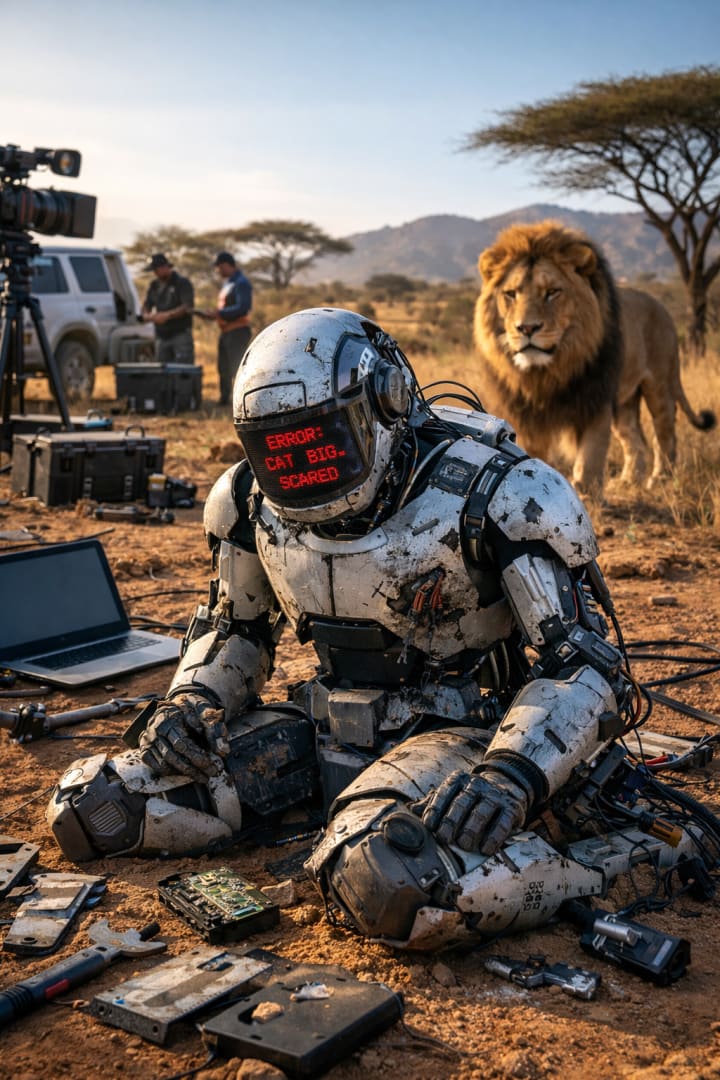

Where Fiction Enters: The “Lion Trauma” Narrative

The idea of a robot developing something like PTSD after encountering a lion is best understood as a metaphor or thought experiment rather than a documented scientific case.

There is no verified public record of an AI robot being psychologically “traumatized” by a lion or any animal.

However, the story resonates because it reflects real technical phenomena:

Systems can enter failure loops after unexpected inputs.

Machine learning models can overreact to rare events.

Edge cases can destabilize algorithms.

Calling this “trauma” is a human analogy — useful for storytelling but not a clinical or engineering diagnosis.

Fictional element: The emotional framing of fear or PTSD in machines.

What Is Fact: AI Can Fail in Surprising Ways

Researchers often observe unexpected behavior when systems encounter inputs that differ from their training data, a problem known as "distribution shift."

Examples include:

Image recognition systems misclassifying objects under unusual lighting.

Language models generating incorrect or nonsensical outputs.

Autonomous robots halting when encountering unfamiliar obstacles.

Fact: AI is powerful but still brittle when facing novelty.
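To make "distribution shift" a little more concrete, here is a minimal Python sketch, assuming a single hypothetical input feature (average image brightness) and a simple z-score rule. The function names, sample values, and 3.0 threshold are illustrative assumptions, not any company's actual safeguard. The idea is to record what "normal" looked like during training and flag inputs that fall far outside that range.

```python
import statistics

def fit_stats(training_values):
    """Record the mean and sample standard deviation of one feature seen in training."""
    return statistics.mean(training_values), statistics.stdev(training_values)

def is_out_of_distribution(value, stats, threshold=3.0):
    """Flag a value more than `threshold` standard deviations from the training mean."""
    mean, std = stats
    return abs(value - mean) / std > threshold

# Hypothetical data: average brightness (0-255) of daytime training photos.
training_brightness = [120, 135, 128, 140, 132, 125, 138, 130]
stats = fit_stats(training_brightness)

# 15 mimics a dark night-time scene the system never saw during training.
for reading in [131, 127, 15]:
    if is_out_of_distribution(reading, stats):
        print(f"{reading}: unfamiliar input, fall back to a safe behavior")
    else:
        print(f"{reading}: within training range")
```

Real systems monitor many signals at once and use more robust detectors, but the principle is similar: notice when the world no longer looks like the training data, and switch to a cautious behavior instead of trusting the model blindly.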

Why the Lion Story Persists

Stories like this endure because they dramatize a real concern: the gap between simulated intelligence and real-world complexity.

A lion represents the ultimate symbol of unpredictability — a reminder that nature cannot be fully modeled.

Even if the literal event is fictional, the lesson is grounded in reality:

Extreme scenarios expose limitations.

Engineers must plan for rare events.

Overconfidence in models can be costly.

Real Financial Risks in AI Development

Companies can lose millions due to:

Hardware damage

Delayed product launches

Regulatory scrutiny

Investor reactions

Reputational harm

In large-scale research programs, even a single failed experiment can cascade into months of additional work.

Fact: Financial loss from experimental setbacks is common in cutting-edge technology.

The Ethical Angle: Learning from Hypotheticals

Even hypothetical stories serve a purpose. They prompt serious questions:

Should AI be exposed to extreme stress conditions?

How do we design systems that recover gracefully from failure?

What responsibilities do researchers carry when deploying experimental technology?

Thought experiments — even fictional ones — help shape ethical frameworks.

What Experts Actually Say About “AI Emotions”

Scientists generally agree:

AI does not feel emotions.

Apparent emotional responses are pattern recognition outputs.

Terms like “fear” or “trauma” are analogies, not literal experiences.

Understanding this distinction is crucial to avoiding misunderstandings about machine capabilities.

A Balanced View: Story as Metaphor

Think of the lion narrative as a parable about innovation:

The lion symbolizes real-world uncertainty.

The robot represents human ambition.

The failure reflects the cost of learning.

Stories often communicate truths more vividly than technical reports.

Conclusion: Fact Meets Imagination

So — can a lion cause an AI company to lose millions?

In reality: Companies can lose millions when experiments encounter unexpected challenges.

In storytelling: A lion encounter becomes a vivid way to illustrate those risks.

The blend of fact and fiction reminds us that while technology advances rapidly, humility remains essential. The world is complex, and no model captures it perfectly.

About the Creator

Omasanjuwa Ogharandukun

I'm a passionate writer & blogger crafting inspiring stories from everyday life. Through vivid words and thoughtful insights, I spark conversations and ignite change—one post at a time.
