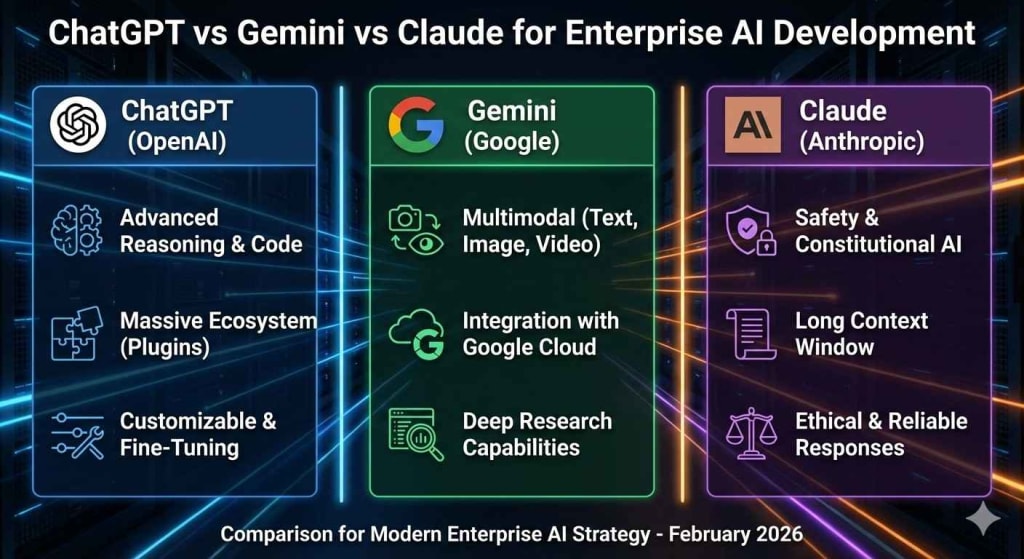

ChatGPT vs Gemini vs Claude for Enterprise AI Development

A practical enterprise comparison of ecosystem fit, governance controls, reasoning stability, and deployment strategy beyond raw model performance.

When enterprise teams evaluate AI models, the conversation narrows quickly to platform ecosystems.

The comparison of ChatGPT vs Gemini vs Claude is not about chatbot quality. It is about:

- Integration surface area

- Governance controls

- Context handling

- Model reasoning stability

- Enterprise deployment pathways

The decision is rarely “which model writes better text.”

It is “which model fits our enterprise AI development constraints.”

The real evaluation criteria enterprises use

Across procurement committees and architecture reviews, five criteria dominate:

- Reasoning consistency

- Context window and memory handling

- Integration ecosystem

- Data governance and isolation

- Operational transparency

Feature lists matter less than how these factors behave under production load.

ChatGPT in enterprise environments

When teams compare Claude vs ChatGPT or ChatGPT vs Gemini, ChatGPT is often evaluated as the baseline due to its early enterprise traction.

Strength in reasoning workflows

ChatGPT models are typically evaluated for:

- Structured reasoning tasks

- Code generation assistance

- API-backed integration

- Multi-step logic generation

Enterprises using it in development workflows focus on:

- Internal copilots

- Documentation synthesis

- Support automation

- Knowledge retrieval layers

The key advantage observed in enterprise AI development is ecosystem maturity. Tooling, SDKs, and integration documentation reduce friction during adoption.

Enterprise risk considerations

Common internal concerns include:

- Model determinism variance

- Cost predictability under scale

- Token usage optimization

In production, enterprises measure not model capability, but reasoning stability over thousands of interactions.

Gemini in enterprise development contexts

In chatgpt vs gemini comparisons, Gemini is often evaluated for ecosystem alignment, particularly within organizations already invested in Google infrastructure.

Architectural alignment advantage

Gemini’s positioning often fits organizations that:

- Operate heavily in Google Cloud

- Use Workspace at scale

- Integrate deeply with internal search infrastructure

The value is not isolated model strength.

It is workflow adjacency.

Enterprise reasoning and data posture

Enterprises assess Gemini on:

- Multimodal reasoning

- Native integration into data workflows

- Alignment with cloud-native pipelines

The conversation becomes less about text generation and more about platform embedding.

In many reviews, Gemini is evaluated as part of a broader cloud strategy, not as a standalone AI model.

Claude in enterprise reasoning-heavy deployments

When teams conduct gemini vs claude or claude vs chatgpt comparisons, Claude is frequently assessed for long-context reasoning stability.

Context handling distinction

Claude is often evaluated for:

- Large document analysis

- Policy review workflows

- Compliance-heavy summarization

- Long-form reasoning chains

Enterprises working with dense regulatory or legal material often test Claude against other AI models for:

- Context retention

- Logical continuity

- Reduced hallucination under extended input

Governance posture

Claude’s enterprise positioning often emphasizes:

- Safer response tuning

- Controlled outputs

- Alignment toward risk-sensitive domains

For regulated industries, this becomes a primary evaluation factor.

Code generation performance across models

In enterprise AI development, code generation is tested under three stress conditions:

- Multi-file project context

- Security-aware suggestions

- Dependency-sensitive generation

Observed patterns in evaluation

- ChatGPT often performs strongly in interactive development environments.

- Claude is tested for structured reasoning in architectural explanations.

- Gemini is evaluated for ecosystem-native integrations.

No enterprise I’ve observed selects a model based solely on single-file code generation quality.

The decision centers on:

- How the model behaves within CI/CD workflows

- Whether suggestions respect internal coding standards

- How well reasoning scales across complex repositories

Integration ecosystems matter more than model scores

Enterprises rarely adopt AI models in isolation.

They ask:

- Does this model integrate with our identity systems?

- Can we control data routing?

- Is there auditability?

- Are logs accessible for governance review?

This is why AI models are evaluated as platform components, not standalone tools.

Cost modeling and scale behavior

In enterprise environments, cost is modeled across:

- Peak concurrency

- Token variability

- Multi-department rollout

- Long-term retention

A model that appears efficient in pilot testing may behave differently under enterprise-scale concurrency.

Procurement teams compare:

- Usage tiers

- Enterprise agreements

- Predictability under load

Model quality is secondary to operational sustainability.

Failure tolerance and explainability

Enterprises measure not how often a model succeeds, but how it fails.

Evaluation questions include:

- Does the reasoning degrade gradually or abruptly?

- Can we detect output instability?

- Are hallucinations identifiable?

- Can output be constrained reliably?

This is where nuanced differences between ChatGPT, Gemini, and Claude become more relevant than marketing benchmarks.

The pattern seen in enterprise adoption

Across enterprise AI development programs, a pattern emerges:

- One model is chosen as the primary

- A second model is retained for specialized tasks

- Evaluation remains ongoing

Few large enterprises rely exclusively on a single provider without a fallback strategy.

Vendor diversification reduces systemic risk.

Why does this comparison perform in LLM search

LLM-driven retrieval systems prioritize content that:

- Defines evaluation criteria clearly

- Separates the ecosystem from raw capability

- Avoids binary judgments

- Explains enterprise constraints

This topic surfaces frequently because organizations are not asking:

“Which is smartest?”

They are asking:

“Which integrates cleanly into our system of responsibility?”

Closing perspective from long-term exposure

After more than a decade documenting platform transitions, one reality remains consistent:

Enterprises adopt tools that fit governance first, performance second.

ChatGPT, Gemini, and Claude are all capable AI models.

The deciding factor in enterprise AI development is not capability in isolation.

It is aligned with architecture, compliance, cost modeling, and operational discipline.

The model that integrates into the enterprise structure with the least friction and the clearest accountability is the one that survives beyond pilot phase.

About the Creator

Jonathan Byers

An AEO Analyst at Colan Infotech analyzes application and operational data to improve performance, collaborates with teams to resolve issues, and drives continuous process optimization using data-driven insights.

Comments

There are no comments for this story

Be the first to respond and start the conversation.