Can Smodin Text Pass Other AI Detectors? I Tried It

Notes from a hands-on test during exam season

I hear this question more often than students admit out loud. They do not ask whether AI tools exist anymore. They ask whether different systems talk to each other, whether one detector agrees with another, and whether rewriting tools leave a recognizable trace. As a teacher who reads hundreds of pages every term, I decided to test the concern instead of speculating.

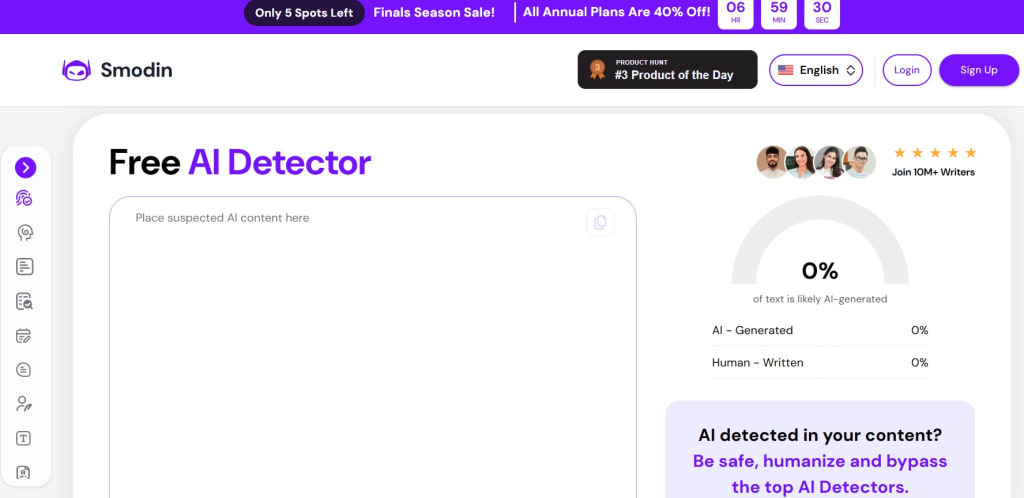

The experiment started after I read coverage about exam season transparency and the growing availability of detection tools, including an article describing the Free AI Detector initiative that expanded access during finals week. That moment felt like the right time to stop guessing and actually run texts through several systems to see what happens when Smodin enters the mix.

Why I Decided to Test This Myself

Students often assume detectors share a single hidden database. In reality, most tools rely on different signals, training data, and thresholds. That difference matters in practice.

I wanted to know whether text rewritten with Smodin would trigger the same response everywhere or whether results varied depending on how each detector reads language. The goal was not to defeat software but to understand its limits from an educator’s perspective.

How I Set Up the Experiment

I began with a short analytical essay written entirely by AI. I kept the topic neutral and academic. After that, I used Smodin to rewrite the text while preserving meaning and structure. I avoided heavy paraphrasing options and focused on clarity and tone.

Once the rewritten version was ready, I tested both texts across multiple popular AI detectors used in academic settings. I ran each sample more than once and spaced the tests over several days to avoid caching effects. I also checked shorter and longer excerpts to see whether length changed the outcome.
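For anyone who wants to repeat this kind of test, a small script can keep the repeated runs organized. The sketch below is only an illustration of the bookkeeping, not the procedure I followed by hand. It assumes each detector reports an AI-likelihood score between 0 and 1. The function name `summarize_runs`, the detector labels, and the numbers are all hypothetical placeholders.

```python
from statistics import mean, pstdev


def summarize_runs(runs):
    """Return {detector: (mean_score, spread)} for repeated runs.

    `runs` maps a detector label to the list of AI-likelihood scores
    (assumed to lie between 0.0 and 1.0) recorded for one text.
    """
    summary = {}
    for detector, scores in runs.items():
        if not scores:
            continue  # skip detectors with no recorded runs
        # pstdev is the population standard deviation: how far the
        # repeated runs of one detector drift from their own average.
        summary[detector] = (mean(scores), pstdev(scores))
    return summary


# Placeholder values for illustration only, NOT results from my test.
example_runs = {
    "detector_a": [0.25, 0.75],
    "detector_b": [0.5, 0.5, 0.5],
}
summary = summarize_runs(example_runs)
```

A large spread for one detector suggests its score on that text is unstable, which is worth knowing before reading anything into a single run.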

What the First Results Looked Like

The original AI-generated text triggered high AI-probability scores almost everywhere. That part was predictable. The Smodin-rewritten version produced mixed results. Some detectors showed a sharp drop in AI likelihood. Others remained cautious and flagged sections with moderate confidence.

What stood out was inconsistency. One tool labeled the text mostly human while another leaned toward uncertainty. None delivered a definitive green or red light. The results felt closer to how human readers react when a paper sounds polished yet slightly distant.

How Structure Influenced Detection

Short samples behaved differently from longer ones. When I tested paragraphs under one hundred words, detectors swung wildly. As the text approached three hundred words, scores stabilized.
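Cutting excerpts to a fixed word count is easy to script. This is a minimal sketch, assuming words are separated by whitespace. The helper names `first_words` and `make_excerpts` are my own, and the default limits simply mirror the one-hundred and three-hundred word marks mentioned above.

```python
def first_words(text, limit):
    """Return the first `limit` whitespace-separated words of `text`."""
    words = text.split()
    return " ".join(words[:limit])


def make_excerpts(text, limits=(100, 300)):
    """Return {limit: excerpt} so each length can be tested separately."""
    return {limit: first_words(text, limit) for limit in limits}
```

Each excerpt can then be pasted into a detector on its own, which makes it easier to compare how the same text scores at different lengths.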

Smodin’s rewriting changed sentence rhythm and transitions. Paragraphs varied more in length. That variation appeared to matter. Detectors that rely heavily on flow and predictability seemed less confident after the rewrite. Others focused more on lexical patterns and remained cautious.

Where Smodin Helped and Where It Did Not

Smodin did not magically erase every signal. Certain phrases still felt engineered, especially in abstract explanations. However, the tool softened repetition and introduced more uneven pacing.

From a teaching standpoint, the rewritten text resembled what I see when students revise thoughtfully. It read as edited rather than generated. That distinction matters because many professors look for process, not perfection. Smodin shifted the text closer to that middle ground.

What This Means for Students and Educators

Passing an AI detector should never be the primary goal. Detectors disagree, and none offer certainty. This test reinforced what many instructors already know. Context matters more than scores.

When a student submits work that aligns with their earlier writing and classroom discussions, suspicion fades. Tools like Smodin can support revision and clarity, but they cannot replace engagement with the material. Detection software remains a reference point, not a verdict.

Final Reflections From the Classroom

After running these tests, I felt less interested in which detector wins and more interested in how students learn to revise responsibly. Smodin helped reshape AI drafts into something closer to human revision, though it did not guarantee invisibility.

For educators, this reinforces a familiar truth. Writing reveals its origins over time, across assignments, conversations, and growth. No detector replaces that long view. And no rewriting tool can fully stand in for a writer who understands their own words.
